Identifying .onion websites can be problematic, especially if they’re “member only” or rarely posted about on frequently used Internet locations such as 4chan or Reddit. There is the Hunchly report, which you can subscribe to at http://hunch.ly/darkweb-osint/, but since I’m unsure how that report identifies new .onion sites, I was tinkering with a way to identify sites myself.

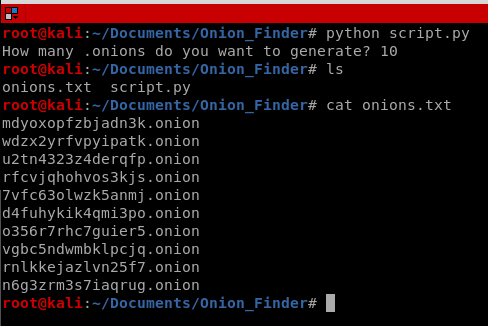

Onion addresses (version 2, the common format at the time of writing) are 16 characters long: a base32 encoding of the first 80 bits of the SHA-1 hash of the site’s public key. That means they consist only of the lowercase letters a through z and the digits 2 through 7. Granted, some .onion sites use longer, 56-character (version 3) addresses, but for now these aren’t the norm. Thus, we can create a short Python script to generate random .onion addresses that we can look up later.

    import random
    import string

    # v2 onion addresses use the base32 alphabet: a-z plus the digits 2-7
    alphabet = string.ascii_lowercase + "234567"

    # input() returns a string, so convert it before passing it to range()
    times = int(input("How many .onions do you want to generate? "))

    with open("onions.txt", "w") as f:
        for _ in range(times):
            x = "".join(random.choice(alphabet) for _ in range(16))
            f.write(x + ".onion\n")
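Before moving on, a quick sanity check on the generator’s output can be handy. Here’s a minimal sketch, separate from the script above (the names is_v2_onion, ALPHABET, and KEYSPACE are my own, purely for illustration), that tests whether a string matches the 16-character format and counts how many such addresses exist.

```python
import re
import string

# 16 characters from the base32 alphabet (a-z, 2-7), followed by ".onion"
V2_ONION = re.compile(r"[a-z2-7]{16}\.onion")

def is_v2_onion(address: str) -> bool:
    """Return True if address looks like a 16-character (v2) onion address."""
    return V2_ONION.fullmatch(address) is not None

# Each of the 16 positions can hold any of these 32 characters
ALPHABET = string.ascii_lowercase + "234567"
KEYSPACE = len(ALPHABET) ** 16  # 32**16 == 2**80
```

For example, all(is_v2_onion(line.strip()) for line in open("onions.txt")) should be True after running the generator. KEYSPACE works out to 2**80, roughly 1.2 × 10^24 possible addresses, which is worth keeping in mind when estimating how many random guesses it might take to land on a live site.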

Now that we have a script that generates .onion addresses, we need a simple way to determine whether a site is up. Since Tor only carries TCP traffic and doesn’t support ICMP, we can’t simply ping the site. We can use curl, however, to get an HTTP status code that tells us whether the website is up or is redirecting us; if no HTTP response comes back at all, the site is most likely down or doesn’t exist.

To test this we’ll use the New York Times .onion address: https://www.nytimes3xbfgragh.onion/

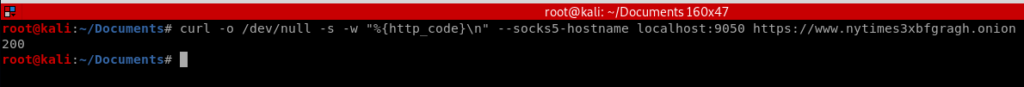

We can then use the following curl command:

    curl -o /dev/null -s -w "%{http_code}\n" --socks5-hostname localhost:9050 https://www.nytimes3xbfgragh.onion

The command has the following options:

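- -o to specify an output location (in our case /dev/null, because we don’t want to save the page)

- -s to run in silent mode, so curl doesn’t show its progress meter or error messages

- -w to write information about the completed transfer (here, the HTTP status code) to standard output

- --socks5-hostname to send the request through Tor’s SOCKS5 proxy and let the proxy resolve the hostname, which is required because .onion names can only be resolved inside Tor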

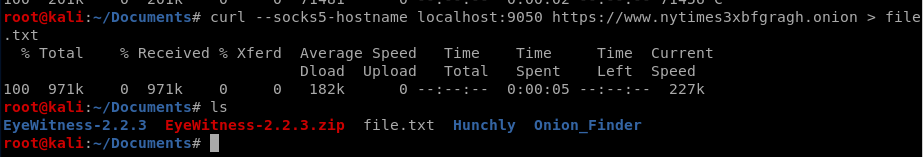

If we run the command without the -o, -w, and -s options, our results look like this:

And that’s only part of it. So let’s run curl again and redirect the output to a .txt file:

    curl --socks5-hostname localhost:9050 https://www.nytimes3xbfgragh.onion > file.txt

You might think we could grep the resulting file for “http_code” or something similar, but the status code we’re looking for isn’t stored in there. So yeah, the previous step was pretty pointless. The HTTP status code is part of the response’s status line, not the HTML body, and by default curl only writes the body. That’s why we need the -w option, specifically -w "%{http_code}\n", which tells curl to print the status code once the transfer finishes. More information on this option can be found in the curl manpage: https://curl.haxx.se/docs/manpage.html

Thus, when we run the full command, we get this:

A beautiful HTTP status code of 200 (OK), which means we’re good to go.

Now we just need to combine the script with the curl command…
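Here’s roughly what that combination could look like: a minimal sketch that shells out to the same curl command with Python’s subprocess module for every address in onions.txt. It assumes curl is installed, Tor is listening on localhost:9050, a Unix-like system (for /dev/null), and plain http:// URLs; the helper names build_curl_args, curl_status, and check_file are hypothetical.

```python
import subprocess
from typing import List

def build_curl_args(url: str, proxy: str = "localhost:9050") -> List[str]:
    """Build the curl command used above as an argument list."""
    return [
        "curl",
        "-o", "/dev/null",           # discard the page body
        "-s",                        # silent: no progress meter or error messages
        "-w", "%{http_code}",        # print only the HTTP status code
        "--socks5-hostname", proxy,  # let Tor resolve the .onion hostname
        url,
    ]

def curl_status(url: str, proxy: str = "localhost:9050", timeout: float = 60) -> int:
    """Run curl through Tor and return the status code (0 = no HTTP response)."""
    try:
        result = subprocess.run(
            build_curl_args(url, proxy),
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return 0
    # curl prints "000" for %{http_code} when no HTTP response was received
    return int(result.stdout.strip() or 0)

def check_file(path: str = "onions.txt") -> None:
    """Print a status code for every address the generator wrote to path."""
    with open(path) as f:
        for line in f:
            address = line.strip()
            if address:
                print(address, curl_status("http://" + address))
```

Passing an argument list (rather than one shell string) avoids quoting problems with the %{http_code} format.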

Update:

So, shelling out to commands like curl from a Python script isn’t ideal, because there are libraries that do the same job natively, like the Requests library: https://requests.readthedocs.io/en/master/

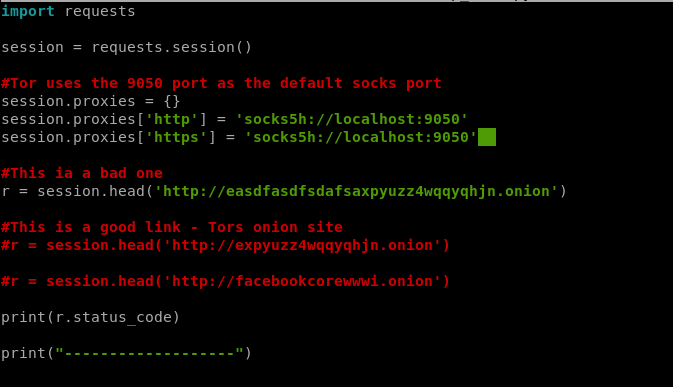

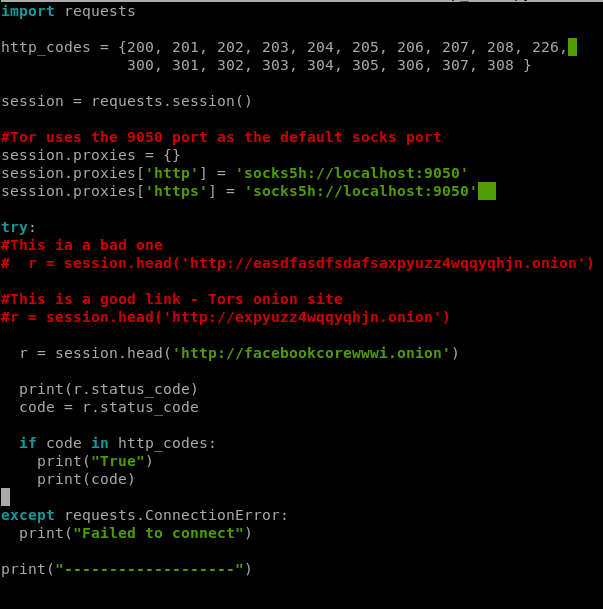

We’re going to start by writing a new script, one that just gets the HTTP status code for .onion sites. We’ll use some .onion sites that we know work, like Facebook and Tor, as well as one we made up, to make sure we’re good to go. Let’s start with this code:

import requests

sessions = requests.session()

#Tor uses the 9050 port as the default Socks port

session.proxies = {}

session.proxies['http'] = 'socks5://localhost:9050'

session.proxies['https] = 'socks5://localhost:9050'

r = session.head('http://facebookcorewwwi.onion')

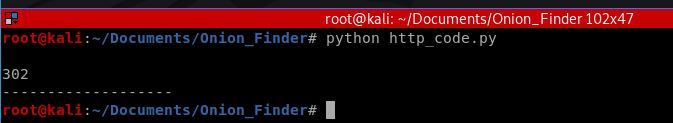

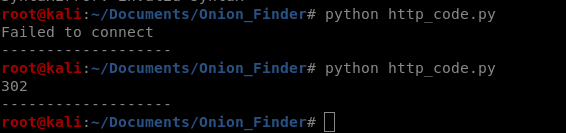

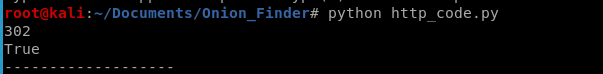

print(r.status_code)And when run, looks like this:

So we now have some code that uses the SOCKS5 proxy to request the HTTP status code from a .onion website, and in this case it returned 302, which means “Found” (a redirect; Requests’ head() doesn’t follow redirects by default). Sweet!

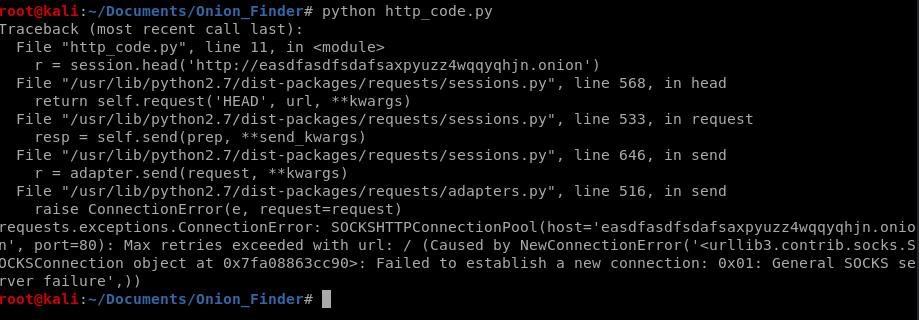

Now, let’s test a “bad” site and see what happens. I’ll use a .onion I made up by mashing the keyboard.

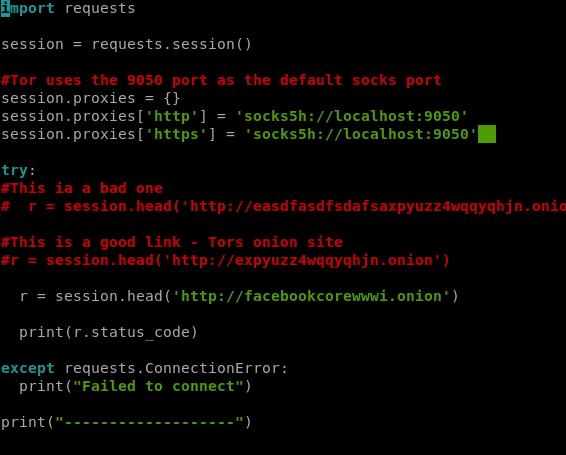

And we get a weird error, which I’d expect, but I’d like it to be handled more gracefully. So let’s do that with try and except.

As the results above show, I tried it twice, once with a bad link and once with a good one, and got the results I expected.
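One way to structure that try/except is a small helper that returns the status code, or None when the request fails. This is a minimal sketch, assuming a session configured with the Tor proxies as above; get_status_code is a made-up name. Catching Requests’ base RequestException covers connection failures and timeouts alike.

```python
import requests

def get_status_code(session: requests.Session, url: str, timeout: float = 30):
    """Return the HTTP status code for url, or None if the request fails."""
    try:
        return session.head(url, timeout=timeout).status_code
    except requests.exceptions.RequestException as exc:
        # Report the failure instead of crashing with a traceback
        print(f"{url}: {type(exc).__name__}")
        return None
```

For example, get_status_code(session, "http://facebookcorewwwi.onion") returns the status code, while a keyboard-mashed address returns None and prints the exception type.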

Now we need to start putting everything together. Ideally, we want our end result to generate a .onion address, check whether it’s live, and, if it is, save the result to a file of some type, probably a CSV. But first, let’s figure out which HTTP codes we care about.

We can do this with a set in Python. Our set is going to look like this:

    http_codes = {200, 201, 202, 203, 204, 205, 206, 207, 208, 226,
                  300, 301, 302, 303, 304, 305, 306, 307, 308}

Now, there might be a more efficient way to do it, but that’s what I’m starting with. Next, we add an if statement.

    code = r.status_code

    if code in http_codes:
        print("True")
        print(code)

This tells us whether our HTTP code is in the set of codes that indicate a website is up. Rock on!
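On the “more efficient way” point: since the set above is just the 2xx and 3xx codes, one option is a range check. This is a sketch with a hypothetical is_live_code helper; note that it also accepts unassigned codes such as 209 or 399, which the hand-written set excludes.

```python
# All 2xx (success) and 3xx (redirect) codes; `in` on a range of ints is a constant-time check
LIVE_CODES = range(200, 400)

def is_live_code(code: int) -> bool:
    """Return True if code is any 2xx or 3xx HTTP status code."""
    return code in LIVE_CODES
```

Usage would be is_live_code(r.status_code) in place of code in http_codes.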

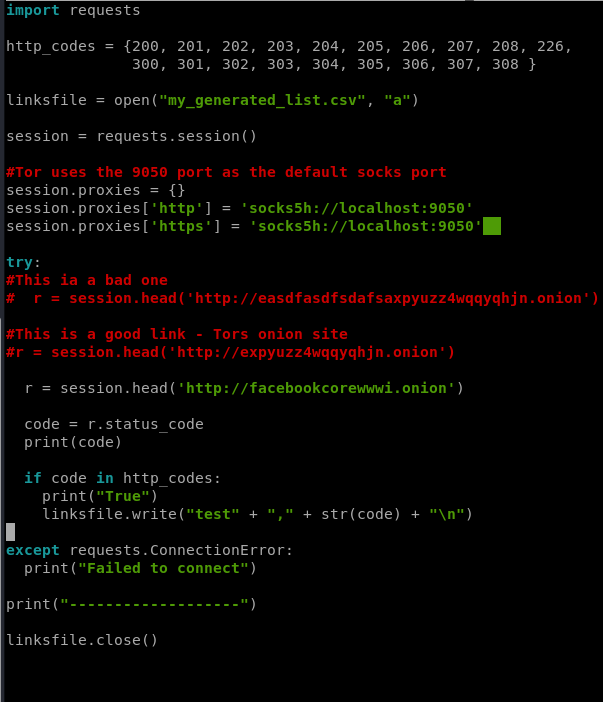

Next, we need to write our results to some kind of file. Let’s use a CSV file called my_generated_list.csv; opening it in append mode creates it if it doesn’t already exist.

We’ll use the standard Python practice of opening it up in append mode…

    linksfile = open("my_generated_list.csv", "a")

…and then writing the information we need to the file…

    linksfile.write("test" + "," + str(code) + "\n")

I used “test” here as a placeholder until we fill it in with our randomly generated URL. Thus, our finished code looks like this.

Yes, I realize I have some print statements in there that I don’t need right now, but I’ll remove them later. And I should probably put some functions in here… but I’ll worry about that later. Or now…

Let’s start putting our random generator in…with a function!
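Here’s a minimal sketch of where this could head, following the plan above (generate, check, save to CSV). It uses Python’s csv module instead of joining strings by hand, and takes the status check as a parameter so it can be plugged into the Tor-proxied Requests session from earlier. The names generate_onion, is_live_code, and scan are hypothetical.

```python
import csv
import random
import string
from typing import Callable, Optional

ALPHABET = string.ascii_lowercase + "234567"  # base32 characters used in v2 addresses

def generate_onion(length: int = 16) -> str:
    """Return a random, syntactically valid v2-style .onion hostname."""
    return "".join(random.choice(ALPHABET) for _ in range(length)) + ".onion"

def is_live_code(code: Optional[int]) -> bool:
    """Treat any 2xx or 3xx response as a live site; None means no response."""
    return code is not None and 200 <= code < 400

def scan(count: int, check: Callable[[str], Optional[int]],
         path: str = "my_generated_list.csv") -> int:
    """Generate `count` addresses, check each one, and append live hits to a CSV.

    `check` takes a URL and returns a status code, or None on failure.
    Returns the number of live sites written.
    """
    hits = 0
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        for _ in range(count):
            url = "http://" + generate_onion()
            code = check(url)
            if is_live_code(code):
                writer.writerow([url, code])
                hits += 1
    return hits
```

For example, scan(100, check) with a check function that wraps session.head() in a try/except (returning None on errors) would test 100 random addresses and append any live ones to my_generated_list.csv.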